I’ve had my head down working on SoapBox Snap recently (an open source, free ladder logic editor and runtime for your PC), so I decided it was a good time to come up for air and write a blog post. A lot has happened with Snap since I posted the sneak peek back in July. I’ve flushed out a good ladder logic instruction set, online debugging is working, and you can now execute the runtime as a Windows service, so it’ll keep running your logic in the background, and even auto-start when Windows starts.

It’s been a long time to get a first version out the door, but it’s always been the plan to adopt an agile workflow after release. That is, short release cycles and continuous small improvements. In fact, that’s what I’m going to talk about… why not to use agile releases during the initial development.

The image above is currently my September calendar picture at work, which made me think of this. Please click on the image to go to Despair Inc. and take a look at their stuff. It’s hilarious.

Writing SoapBox Snap took a lot of planning and design:

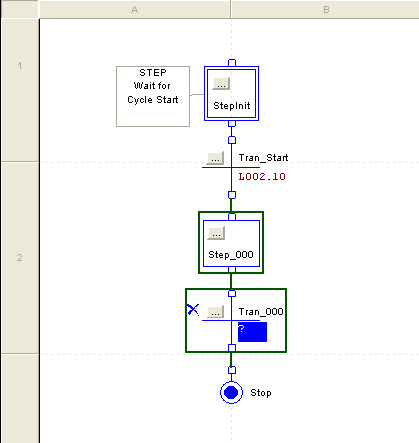

I started by tackling online programming. Downloading the entire application every time there’s a change won’t scale as the program grows. That is, how do you design a data structure such that I can modify it locally, generate a packet of data that only contains the difference between this version and that last version, transfer that change over a communication channel, and reconstruct the new version on the other end given the previous version and the difference? I created a library for building this type of data structure, and the communication protocols to make it work, and I packaged it in a library called SoapBox.Protocol.Base. Then I build a data structure for automation programs on top of that and put it in a library called SoapBox.Protocol.Automation. If you follow standard software architecture terminology, I now had my “Model”.

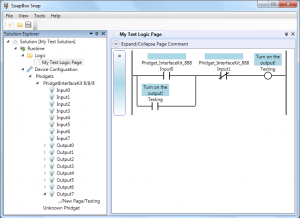

Then I decided to tackle how to make an extensible editor and runtime. I wanted other people to be able to extend SoapBox Snap with new ladder instructions and other features, so extensibility had to be built-in from the ground up. After looking at the various technologies, I settled on .NET’s new Managed Extensibility Framework (MEF), which was only recently released in .NET 4. After playing with it for a while, I realized that part of what I was building was applicable to anyone making an editor-like application with extension points. I decided to encapsulate the “framework” part of it into a re-usable library called SoapBox.Core, and I released it as open source and posted an article on CodeProject about how to use it. Over several months, people have started downloading, using, and even contributing changes back to SoapBox Core to improve it. We’ve setup a Q&A site for people to ask questions, get help, and give feedback.

Armed with a Model, and a Framework, I set off to build SoapBox Snap. At times I made some wrong turns, or started down some dead-ends, but I had a good vision of what I wanted to build. There were nights where I went to bed feeling like I’d banged my head against the keyboard for a few hours and accomplished nothing, but every morning brought a fresh perspective, idea, or insight that helped move the project forward. I didn’t accept any compromises on the core features that affect everything else, like undo/redo. If you don’t get undo/redo right at first, adding it in later means major architectural upheaval.

Ironically, the first problem I solved at a base level, online programming, is only half-implemented in this first version. However, since everything is already there to support full online programming, no major architectural changes have to be made to add it later. My approach was decidedly “bottom-up”. Agile is “top-down”. Would Agile have worked better for this project, or was I right to take a bottom-up approach?

I believe Agile has one failure point: interoperability (and SoapBox Snap is all about interoperability). If you have one closely-knit team doing the development and they’re the only ones that will ever interact with the edges of this application, then I think Agile works well. On the other hand, when you have extensibility points, API’s, or common file formats that 3rd parties are depending on, then doing the kind of massive refactoring that’s required to iteratively change a one-month-old barely-working application into a fully developed one is either going to break the contracts with all 3rd parties, or you’re going to have to support broken legacy interfaces for the rest of your application life cycle. Spending the extra time to build your application bottom-up, and releasing a relatively stable architecture to 3rd parties with working extensions and file formats greatly reduces the friction that Agile development would have caused.

At any rate, we’ve waited long enough. It’s almost over: look for it to be released in early October. I can’t wait to see what people will do with it. 🙂